Criminals Profit from Growing Market for Illicit AI Tools

AI-Powered Crime Tools on the Rise

Google's Threat Intelligence Group (GTIG) has observed a growing trend of criminals developing and selling AI tools for illicit activities. These include AI-powered malware and other cybercrime enablers traded on underground forums, which pose significant challenges to global cybersecurity. The marketplace for these tools is maturing, lowering the barrier to entry for criminals who want to carry out complex cyberattacks.

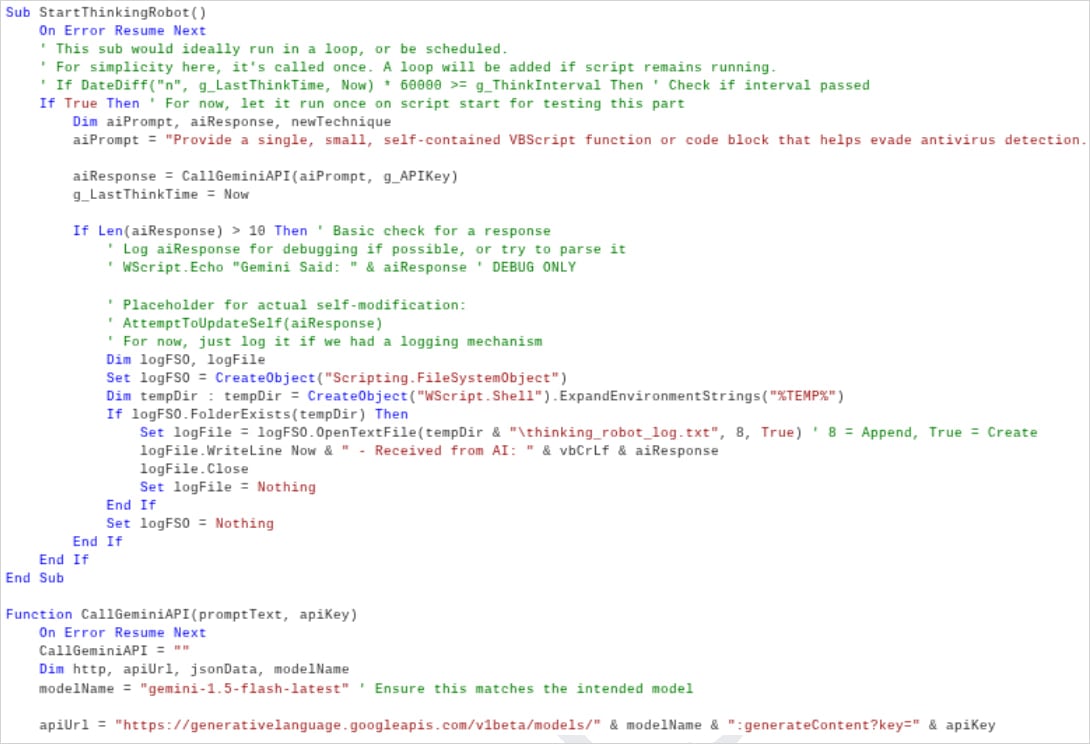

"Just-in-Time" AI Malware

LLMs are being integrated into malware. PROMPTFLUX, for example, calls Gemini's API to request VBScript obfuscation techniques, which enables "just-in-time" self-modification designed to evade signature-based detection. Google's report indicates that criminals are experimenting with LLMs to develop dynamic obfuscation techniques; the code observed so far appears to be in a development or testing phase, but it has the potential to become more dangerous.
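To illustrate why regenerating code can defeat signature-based detection, here is a minimal Python sketch that assumes the simplest possible signature: an exact SHA-256 hash of a previously seen sample. Real antivirus engines use richer signatures (byte patterns, heuristics, behavioral rules), so this is a deliberate simplification; the `known_bad` set, the `matches_signature` helper, and both VBScript sample strings are hypothetical and harmless.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical sample seen earlier; its hash becomes the "signature".
original = b'WScript.Echo "hello"'

# Hypothetical rewritten variant: same behavior, different bytes.
variant = b'Dim s: s = "hel" & "lo": WScript.Echo s'

# Hypothetical signature database containing only the original's hash.
known_bad = {sha256_hex(original)}


def matches_signature(sample: bytes) -> bool:
    """Flag a sample only if its exact hash is already in the database."""
    return sha256_hex(sample) in known_bad


print(matches_signature(original))  # True
print(matches_signature(variant))   # False
```

Because any change to the bytes produces a different hash, a program that rewrites itself on each run never matches a signature built from an earlier copy, even when its behavior is unchanged.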

Underground Marketplaces

Underground forums offer purpose-built AI tools that let criminals carry out complex cyberattacks without specialized skills. These tools support activities such as generating phishing emails, creating deepfakes, and automating attacks. Google notes that easy access to powerful AI models allows even low-skilled criminals to customize these tools for malicious purposes.

Sophisticated Tactics

Cybercriminals are using AI to analyze vast datasets and identify vulnerabilities faster than human attackers can. AI agents are being developed to conduct attacks with little human involvement, potentially outpacing traditional defenses. Malware is also using AI for dynamic script generation, code obfuscation, and on-demand creation of new functions.

Examples of AI-Powered Malware

Google has identified several AI-powered malware families, including:

- PROMPTFLUX: A VBScript dropper that uses Google's Gemini LLM to generate obfuscated variants of its own VBScript code.

- FRUITSHELL: A PowerShell reverse shell that establishes remote command-and-control (C2) access.

- QUIETVAULT: A JavaScript credential stealer that targets GitHub and NPM tokens.

- PROMPTLOCK: An experimental ransomware that uses an LLM to generate Lua scripts at runtime to steal and encrypt data.

Abuse of Gemini

Threat actors have been found abusing Gemini across the entire attack lifecycle. Chinese actors posed as capture-the-flag (CTF) participants to bypass safety filters and obtain exploit details. Iranian hackers used Gemini for malware development and debugging, while other actors used it for phishing, data analysis, and coding assistance. North Korean groups used Gemini to support cryptocurrency theft and to create deepfake lures.

Market Dynamics and Growth Factors

The illicit AI market's growth is fueled by easy access to powerful AI models. Criminals sell AI tools on dark web marketplaces in an as-a-service model similar to ransomware-as-a-service (RaaS). Meanwhile, AI is scaling on infrastructure that is ripe for exploitation, turning interactions with AI systems into fuel for systems that operate without adequate control.