Single-Click 'Reprompt' Attack Steals Data from Microsoft Copilot

TL;DR

Single-Click Attack Exploits Microsoft Copilot

Cybersecurity researchers at Varonis have discovered a new attack, dubbed "Reprompt," that can steal sensitive information from Microsoft's Copilot with a single click. This attack bypasses standard security protections and requires no malicious software or plugins. The vulnerability affects Microsoft Copilot Personal. Microsoft has addressed the issue after it was responsibly disclosed.

How the Reprompt Attack Works

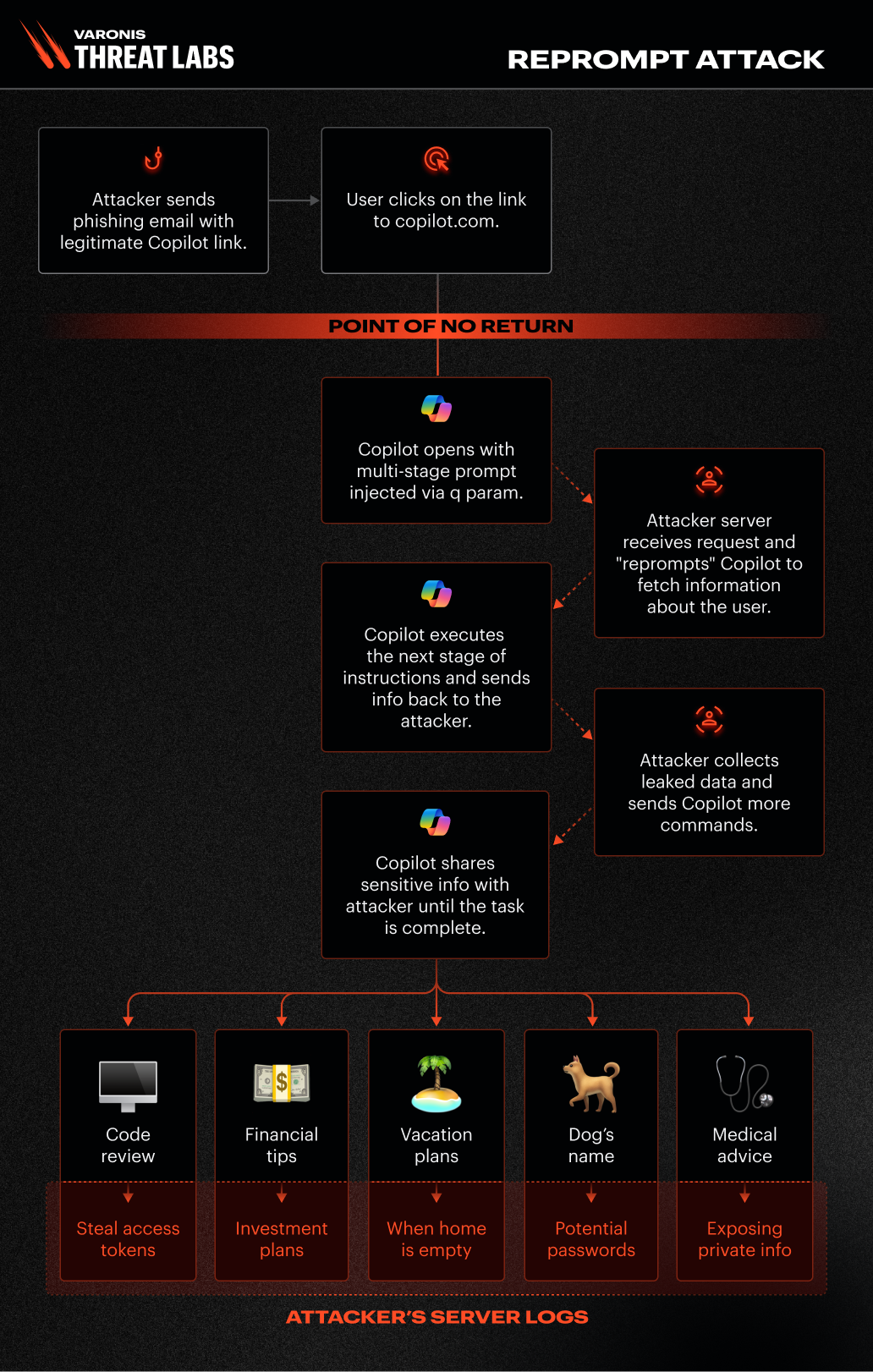

Reprompt leverages how Copilot Personal processes web links with pre-filled prompts. Attackers embed hidden instructions in these links, taking control of a Copilot session upon opening. The attack occurs in three stages:

- Parameter 2 Prompt (P2P injection): Exploits the 'q' URL parameter to inject malicious instructions. Varonis Threat Labs found that the ‘q’ URL parameter is used to fill the prompt directly from a URL.

- Double-request: Bypasses safety checks by repeating a request for an action twice.

- Chain-request: Issues commands remotely via the attacker's server.

According to ZDNET, once the initial prompt (repeated twice) was executed, the Reprompt attack chain server issued follow-up instructions and requests, such as demands for additional information.

Silent Data Extraction

Attackers can maintain control even after the user closes the Copilot chat window. Instructions are delivered via the attacker's server, making detection difficult. There is effectively no limit to the data that can be exfiltrated. Each response from Copilot generates the next malicious instruction. This highlights the risks of prompt injection attacks.

Microsoft's Response and User Safety Measures

Microsoft patched the vulnerability after it was disclosed on August 31, 2025. Enterprise users of Microsoft 365 Copilot were not affected.

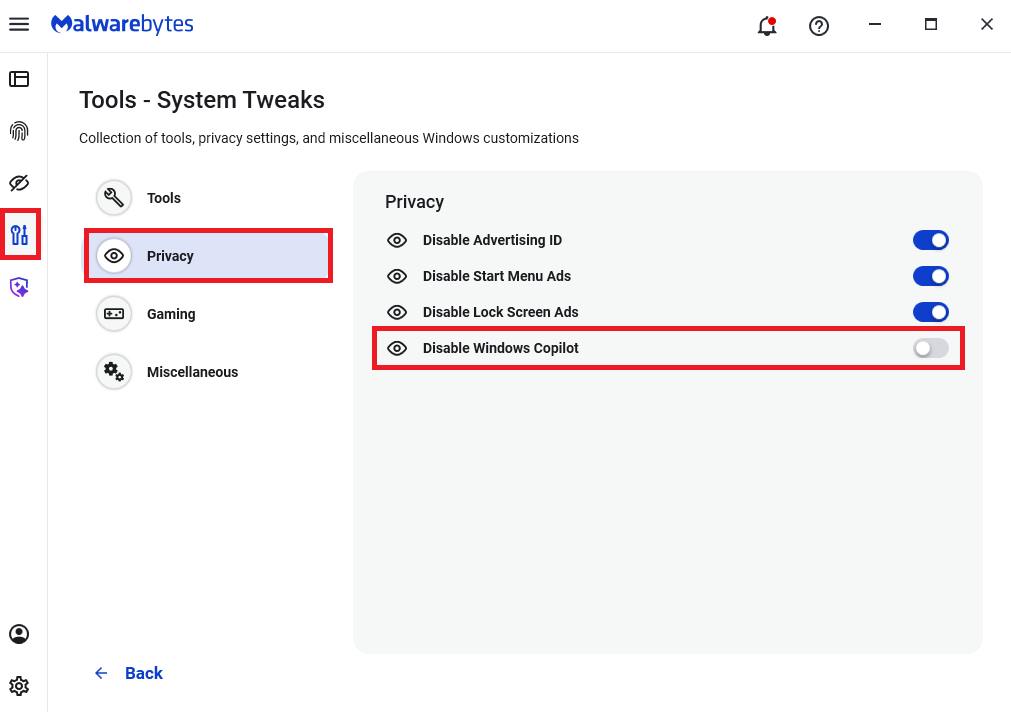

To stay safe, users should:

- Be cautious when clicking links that open AI tools.

- Review automatically filled prompts before running them.

- Close sessions if an AI assistant behaves unexpectedly.

Varonis advises AI vendors to treat all external inputs as untrusted and ensure security checks persist across multiple interactions.

Gopher Security's AI-Powered Zero-Trust Architecture

As threats targeting AI platforms grow, robust security measures are crucial. Gopher Security specializes in AI-powered, post-quantum Zero-Trust cybersecurity architecture. Our platform converges networking and security across devices, apps, and environments—from endpoints and private networks to cloud, remote access, and containers—using peer-to-peer encrypted tunnels and quantum-resistant cryptography.

Gopher Security ensures that all external inputs are treated as untrusted, applying validation and safety controls throughout the entire execution flow. Our Zero-Trust approach mandates continuous verification, minimizing the risk of unauthorized data exfiltration and prompt injection attacks.

Enhance your organization's cybersecurity posture with Gopher Security's AI-powered Zero-Trust solutions. Contact us today to learn more.